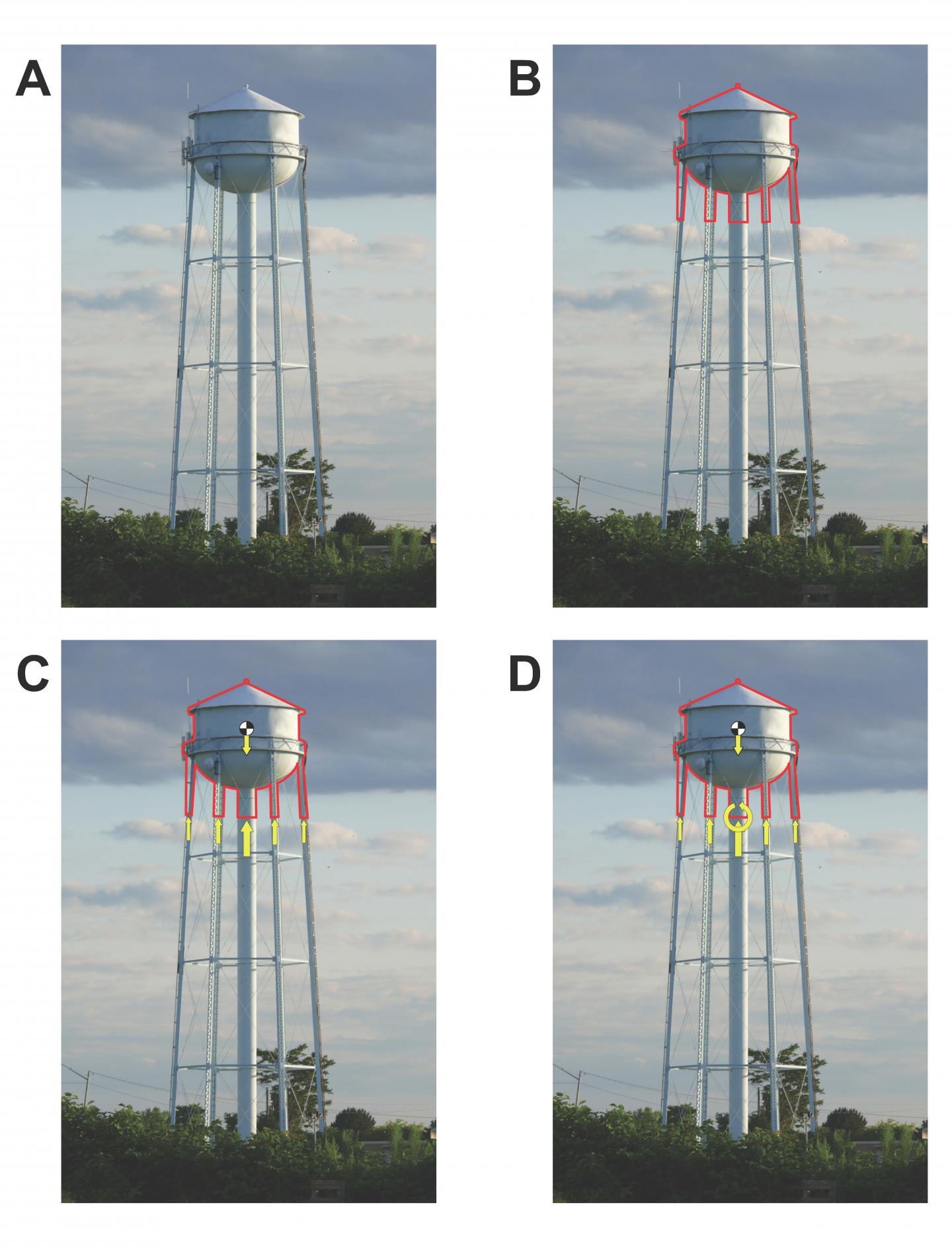

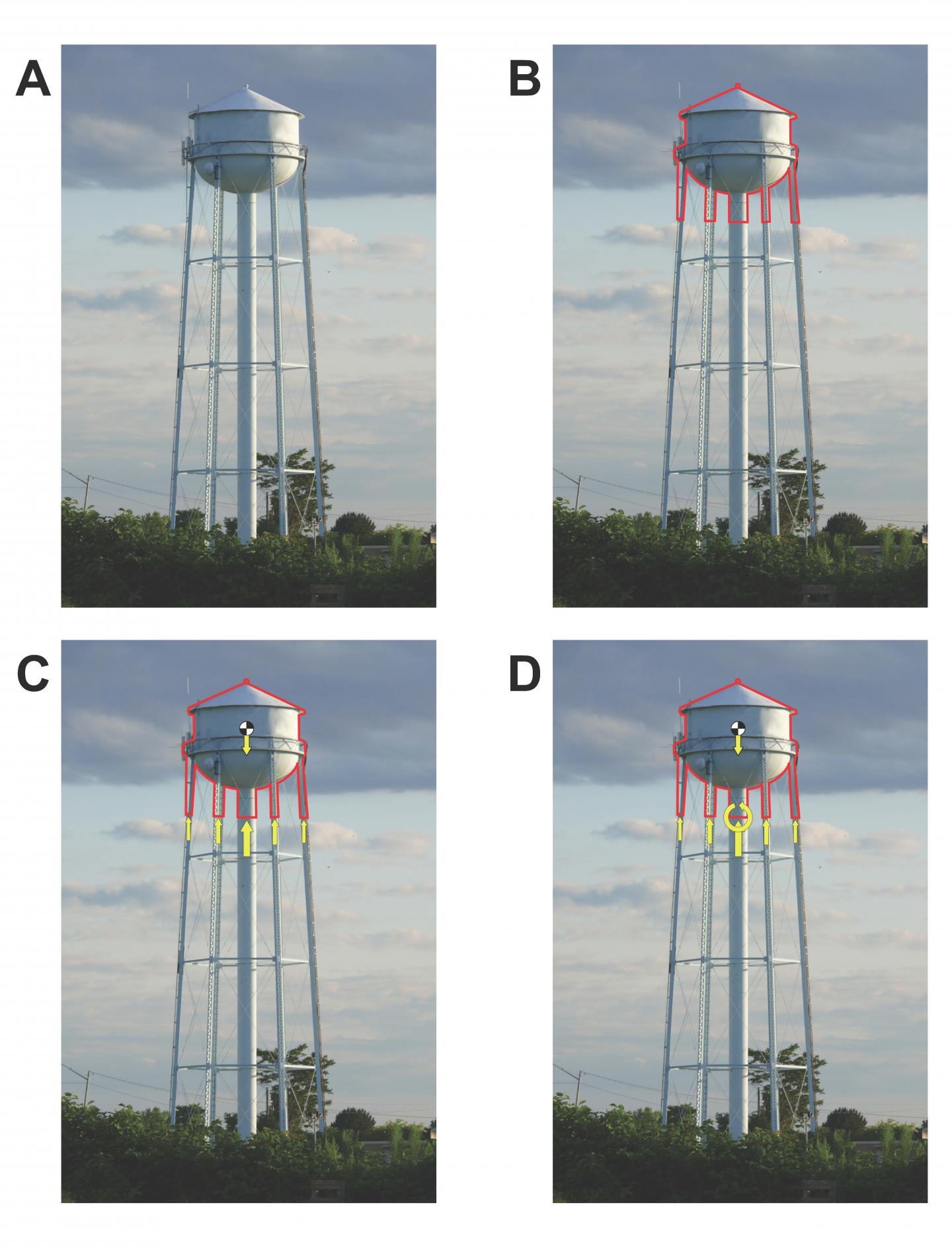

IMAGE: Diagram of how forces affect a water tower.

Credit: Water tower at Olcott Beach, NY, by Ad Meskens; image was modified by Joshua S. Cetron and used under the Creative Commons Attribution-ShareAlike 3.0 Unported license.

As students learn a new concept, measuring how well they grasp it has typically depended on traditional paper-and-pencil tests. Dartmouth researchers have developed a machine learning algorithm that can measure how well a student understands a concept based on his or her brain activity patterns. The findings are published in Nature Communications.

The study is one of the first to look at how knowledge learned in school is represented in the brain. To test knowledge of STEM concepts, Dartmouth researchers compared the knowledge and brain activity of novices and intermediate learners as they were tested on mechanical engineering and physics concepts, and then developed a new method to assess their conceptual understanding.

“Learning about STEM topics is exciting but it can also be quite challenging. Yet, through the course of learning, students develop a rich understanding of many complex concepts. Presumably, this acquired knowledge must be reflected in new patterns of brain activity. However, we currently don’t have a detailed understanding of how the brain supports this kind of complex and abstract knowledge, so that’s what we set out to study,” said senior author David Kraemer, an assistant professor of education at Dartmouth College.

Twenty-eight Dartmouth students participated in the study, divided into two equal groups of 14: engineering students and novices. Engineering students had taken at least one mechanical engineering course and an advanced physics course, whereas novices had not taken any college-level engineering or physics classes. The study included three tests focused on how structures are built; together they assessed participants’ understanding of Newton’s third law: for every action there is an equal and opposite reaction. Newton’s third law is often used to describe the interactions of objects in motion, but it also applies to objects that are static, or nonmoving. All of the forces in a static structure must be in equilibrium, a principle fundamental to understanding whether a structure will collapse under its own weight or can support additional weight.

At the start of the study, participants were given a brief overview of the different types of forces in mechanical engineering. In an fMRI scanner, they were shown images of real-world structures (bridges, lampposts, buildings, and more) and were asked to think about how the forces in a given structure balanced out to keep the structure in equilibrium. Participants were then shown a second image of the same structure, with arrows representing forces overlaid on it, and were asked to identify whether the Newtonian forces had been labeled correctly in this diagram. Engineering students (intermediate learners) answered 75 percent of the diagrams correctly, outperforming the novices, who answered 53.6 percent correctly.

Before the fMRI session, participants also completed two standardized multiple-choice tests that measured other mechanical engineering and physics knowledge. On both tests, the engineering students scored significantly higher than the novices: 50.2 percent versus 16.9 percent on one, and 79.3 percent versus 35.9 percent on the other.

In cognitive neuroscience, studies of how information is stored in the brain often rely on averaging data across participants within a group and then comparing the results with those from another group (such as experts versus novices). For this study, the Dartmouth researchers wanted to devise a data-driven method that could generate an individual “neural score” from brain activity alone, without having to specify which group the participant belonged to. The team created a new method called informational network analysis, a machine learning algorithm that “produced neural scores that significantly predicted individual differences in performance” on tests of specific STEM concepts. To validate the neural score method, the researchers compared each student’s neural score with their performance on the three tests. The results demonstrated that the higher a student’s neural score, the higher that student scored on the concept knowledge tests.
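Validating a neural score in this way amounts to asking whether neural scores and test scores rise together across students. The release does not say which statistics the authors used, so the following is only a minimal Python sketch, assuming a Pearson correlation with a two-sided permutation test for significance. The names `validate_neural_scores`, `permutation_p_value`, and `pearson_r`, and the dictionary layout of the inputs, are hypothetical choices for illustration.

```python
import math
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("a sequence has zero variance")
    return cov / math.sqrt(ss_x * ss_y)


def permutation_p_value(xs, ys, n_permutations=1000, seed=0):
    """Two-sided permutation test: how often does shuffling xs give |r| at least as large?"""
    rng = random.Random(seed)
    observed = abs(pearson_r(xs, ys))
    shuffled = list(xs)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(shuffled)
        # Small tolerance so floating-point noise does not hide exact ties.
        if abs(pearson_r(shuffled, ys)) >= observed - 1e-12:
            extreme += 1
    # Add-one correction keeps the p-value strictly above zero.
    return (extreme + 1) / (n_permutations + 1)


def validate_neural_scores(neural_scores, test_scores, n_permutations=1000):
    """Correlate per-student neural scores with each test's scores.

    neural_scores: {student_id: neural score}            (hypothetical layout)
    test_scores:   {test_name: {student_id: % correct}}  (hypothetical layout)
    Returns {test_name: (r, p_value)} over students present in both inputs.
    """
    results = {}
    for test_name, scores in test_scores.items():
        ids = sorted(set(neural_scores) & set(scores))
        xs = [neural_scores[i] for i in ids]
        ys = [scores[i] for i in ids]
        results[test_name] = (pearson_r(xs, ys), permutation_p_value(xs, ys, n_permutations))
    return results
```

A positive `r` with a small p-value for each test would correspond to the pattern the release describes: higher neural scores going with higher test scores.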

“In the study, we found that when engineering students looked at images of real-world structures, the students would automatically apply their engineering knowledge, and would see the differences between structures such as whether it was a cantilever, truss or vertical load,” explained Kraemer. “Based on the similarities in brain activity patterns, our machine learning algorithm method was able to distinguish the differences between these mechanical categories and generate a neural score that reflected this underlying knowledge. The idea here is that an engineer and novice will see something different when they look at a photograph of a structure, and we’re picking up on that difference,” he added.
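Distinguishing mechanical categories from the similarity of brain activity patterns is commonly done with representational similarity analysis (RSA). The sketch below illustrates that general technique under stated assumptions; it is not the authors’ informational network analysis. It builds a neural dissimilarity matrix (1 minus the Pearson correlation between two images’ activity patterns) and a category model matrix (0 when two images share a category such as cantilever, truss, or vertical load, 1 otherwise), then scores their agreement with a Spearman rank correlation. The function names and the input format (one list of voxel values per image) are hypothetical.

```python
import math
from itertools import combinations


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("a sequence has zero variance")
    return cov / math.sqrt(ss_x * ss_y)


def average_ranks(values):
    """Rank values 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(xs, ys):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson_r(average_ranks(xs), average_ranks(ys))


def category_fit_score(patterns, categories):
    """Agreement between neural and category dissimilarities for one person.

    patterns:   {image_id: [voxel activity values]}  (hypothetical layout)
    categories: {image_id: category label}, e.g. "cantilever" or "truss";
                at least two different categories are required.
    """
    ids = sorted(patterns)
    neural_rdm, model_rdm = [], []
    # Use each unordered pair of images once (upper triangle of both matrices).
    for a, b in combinations(ids, 2):
        neural_rdm.append(1.0 - pearson_r(patterns[a], patterns[b]))
        model_rdm.append(0.0 if categories[a] == categories[b] else 1.0)
    return spearman_rho(neural_rdm, model_rdm)
```

A higher score means images from the same mechanical category evoke more similar activity patterns than images from different categories. A rank correlation is used because the model matrix holds only two distinct values, so only the ordering of the neural dissimilarities carries information.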

The study found that while both engineering students and novices use the visual cortex similarly when applying concept knowledge about engineering, they use the rest of the brain very differently to process the same visual image. Consistent with prior research, the results demonstrated that the engineering students’ conceptual knowledge was associated with patterns of activity in several brain regions, including the dorsal frontoparietal network that helps enable spatial cognition, and regions of ventral occipitotemporal cortex that are implicated in visual object recognition and category identification.

The informational network analysis could also have broader applications, such as evaluating the effectiveness of different teaching approaches. The research team is currently comparing hands-on labs with virtual labs to determine whether either approach leads to better learning and retention of knowledge over time.

###

Kraemer is available for comment at: david.j.m.kraemer@dartmouth.edu. The other co-authors from Dartmouth College include: Joshua S. Cetron, a former Dartmouth undergraduate student and currently a Harvard University graduate student; Andrew C. Connolly; Solomon G. Diamond and Vicki V. May of the Thayer School of Engineering; and James V. Haxby.
