I’ve been to the bagel place down the street from my apartment maybe a hundred times. The staff is friendly. I like the food. They’re always open.

Lots of people have their own opinions about it. Googling “Smith Street Bagels” produces pages of listings, most accompanied by star ratings visible right there in the results, in bright Google orange, from places like Seamless, Yelp and Google itself. “I search maybe five or six sites every week, just to see what flow is going on,” said Juan Alicea, who manages the Brooklyn shop.

Each website has its idiosyncrasies. Yelp reviewers write like restaurant reviewers. (“Yelp is where people like to complain,” said Mr. Alicea.) Foursquare users rate on a scale of 0 to 10, which Google converts to stars, and pass along tips. Reviewers at Find Me Gluten Free focus on the question of kitchen cross-contamination, which is more of an up-down sort of judgment, but those become stars as well. Mapquest’s reviews are pulled in from Yelp, though you wouldn’t know that unless you checked; they show up in Google results as Google stars. You may know that Seamless and GrubHub are the same company, and notice that they list the same star rating, with the same number of reviews, but that would not explain why that rating appears three times in Google’s results, once under each service, and once under an ad.
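The article doesn't say how Google turns a Foursquare score out of 10, or a thumbs-up/thumbs-down judgment, into stars. A minimal sketch, assuming a plain linear rescale (the helper `rescale_to_stars` is hypothetical, not Google's documented method), shows how very different kinds of judgment can end up as the same row of stars:

```python
def rescale_to_stars(score: float, scale_min: float, scale_max: float) -> float:
    """Linearly map a score from [scale_min, scale_max] onto a 0-5 star range.

    Hypothetical helper: assumes a linear conversion. The article does not
    describe how Google actually converts other sites' scales.
    """
    if scale_max <= scale_min:
        raise ValueError("scale_max must be greater than scale_min")
    if not scale_min <= score <= scale_max:
        raise ValueError("score is outside the source scale")
    fraction = (score - scale_min) / (scale_max - scale_min)
    return round(fraction * 5, 1)


# A Foursquare-style score out of 10
print(rescale_to_stars(9.2, 0, 10))
# A hypothetical up-down site, expressed as the share of "up" votes (0 to 1)
print(rescale_to_stars(0.8, 0, 1))
```

Once both land on the same five-star scale, nothing in the stars themselves tells a searcher which kind of judgment produced them.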

The delivery apps present a particular problem: Seamless and GrubHub customers, even those who live close by, may have never set foot in the store, so their reviews may focus solely on the speed and ease of their transaction. And Smith Street Bagels, like many small businesses in New York, doesn’t employ its own delivery people. Instead, it uses a service called Relay, which matches delivery drivers with nearby restaurants.

“We can make a sandwich or an order within two minutes, but we still have to wait an extra 10 or 15 minutes for the driver to actually come to us,” Mr. Alicea said. “If the eggs get there cold, it’s a bad delivery for us.” This may also mean a bad review, which will be factored into the first star rating a Google searcher might see, not to mention into the rating in the Seamless app — a major source of revenue — and beyond. Last week, Mr. Alicea said, a dissatisfied Seamless reviewer left a review on Yelp. (While restaurants tend to bear the brunt of a bad Seamless experience, a sufficiently peeved customer will sometimes leave a review of Seamless itself in the App Store, on a five-star scale.)

In the case of the cold Seamless order, there is limited recourse for the restaurant. Aside from reaching out to the disgruntled reviewer to make nice, they can rate the delivery person on Relay. “It’s a five-star system,” Mr. Alicea said. “If you give them one star, you won’t get that driver again.”

My neighborhood bagel shop, in other words, exists in a world ruled by stars. Though ratings of this kind have existed for well over a century, the internet has given them new power to convey, and accomplish, countless different meanings and functions.

Star ratings, meant to serve as a shorthand for written reviews, now require significant context to be comprehensible. Three stars for a restaurant might be excellent or middling, depending on the platform. On Uber, a 4.3-star average might mean a driver is at risk of getting booted from the system, while on Amazon 4.3 stars might be the result of a hard-fought and expensive campaign to climb to the first page of the search results for “Bluetooth headphones.” A two-star restaurant might have mice, or its owner might have just made the news.

Stars are complicated fictions strutting through the world with a sense of meaning, tugged in every direction by forces over which they have little control, used and abused by systems in which they’re important until they’re not. Stars: They’re just like us!

The Amazon Experiment

Amazon is pushing the capacity of the star to its absolute limit. And interpreting its ratings is, in 2019, a crucial but labor-intensive consumer skill. They’re category-specific: A three-star shirt might make your skin itch, or it might just have unusual sizing. A three-star masterpiece of a book might just have divisive politics. A three-star electronic device might work perfectly well, but ship slowly from overseas. You can’t really know unless you read the written reviews.

On Amazon, stars are influenced by less visible factors, too. For Amazon vendors and sellers, star ratings serve a variety of functions. Without reviews, a product isn’t likely to show up in searches; without a good star rating, it isn’t likely to be clicked at all. The stakes are high, and the stars themselves are more important than how they’re made. Vendors (whose products you see as “sold by Amazon”) can distribute their merchandise to semiprofessional reviewers through the Vine program, where users provide reviews in exchange for free products. Everyday sellers, for the time being, can’t. Competition is fierce. Options are limited. The inevitable result is fraud.

“You look at Amazon reviews before 2013, and a majority of reviews are very trustworthy,” said Saoud Khalifah, the founder of Fakespot, a company that analyzes review sites. “After 2014, 2015,” however, “when they opened up the marketplace, sellers started engaging in new tactics,” he said. Sellers might offer prospective reviewers a free product in exchange for a review, or pay them outright. They might include a note with a package, enticing customers to write to a secret email address, where someone on the other end might offer to refund the purchase in exchange for a review. “We’ve seen sellers on Amazon pump other pages with five-star ratings,” Mr. Khalifah said. The goal? To get competitors banned from the platform.

On Amazon, the star rating is both fundamentally flawed and increasingly empowered. It’s still a visible signal to customers and sellers. Behind the scenes, it also helps determine what gets seen in the first place, how items are sorted and, ultimately, what sells. A star rating is a star rating, whether it’s composed of hundreds of uniform reviews, or just three, or of an erratic mix of highs and lows, resulting in the same average.
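The arithmetic behind that last point is easy to check. In this sketch the three rating histories are invented for illustration; each averages exactly four stars, and only a measure of spread (here the population standard deviation) tells them apart:

```python
from statistics import mean, pstdev

# Hypothetical rating histories, 1 to 5 stars each
uniform = [4] * 300          # hundreds of identical four-star reviews
sparse = [3, 4, 5]           # just three reviews
erratic = [5, 5, 5, 1, 4]    # a volatile mix of highs and lows

for label, ratings in [("uniform", uniform), ("sparse", sparse), ("erratic", erratic)]:
    # Every line reports the same mean; only the spread differs
    print(f"{label:8} n={len(ratings):3}  mean={mean(ratings):.1f}  spread={pstdev(ratings):.2f}")
```

A single displayed star figure corresponds to the `mean` column alone; the sample size and spread stay hidden unless a shopper digs into the review histogram.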

And about that “average,” anyway: It’s not raw, or any sort of “average” at all. “Amazon calculates a product’s star ratings based on a machine learned model instead of a raw data average,” the company tells customers. “The model takes into account factors including the age of a rating, whether the ratings are from verified purchasers, and factors that establish reviewer trustworthiness.”
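Amazon doesn't publish its model, so the following is only a sketch of the general idea of a weighted score, not Amazon's method. It assumes two invented rules: a rating's weight halves every `half_life_days`, and ratings from unverified purchases count at `unverified_weight` of a verified one. Both parameters and the sample ratings are hypothetical:

```python
from dataclasses import dataclass


@dataclass
class Rating:
    stars: int       # 1 to 5
    age_days: int    # how long ago the rating was left
    verified: bool   # whether it came from a verified purchase


def weighted_stars(ratings, half_life_days=365.0, unverified_weight=0.5):
    """Hypothetical weighting: newer and verified ratings count for more.

    Not Amazon's model; half_life_days and unverified_weight are invented
    parameters for illustration only.
    """
    total = 0.0
    weight_sum = 0.0
    for r in ratings:
        w = 0.5 ** (r.age_days / half_life_days)  # exponential decay by age
        if not r.verified:
            w *= unverified_weight                 # discount unverified ratings
        total += w * r.stars
        weight_sum += w
    if weight_sum == 0:
        raise ValueError("no ratings to weight")
    return total / weight_sum


# Two old, unverified raves and two recent, verified complaints
ratings = [
    Rating(5, 900, False),
    Rating(5, 800, False),
    Rating(2, 30, True),
    Rating(3, 10, True),
]
raw = sum(r.stars for r in ratings) / len(ratings)
print(f"raw average: {raw:.2f}, weighted: {weighted_stars(ratings):.2f}")
```

Under these made-up rules the same four ratings produce a noticeably lower score than their raw average, which is the sense in which the number a shopper sees is already an interpretation.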

So even before we begin our interpretations of Amazon’s star reviews, Amazon’s “machine learned model” has interpreted them for us. Or at least for itself.

Just as the most important context for an Amazon review might not be the online storefront, where a customer sees it, the most important context for that Seamless star average might not be a Google result, or even how it appears in the Seamless app. The real context is how the Seamless algorithm interprets stars and uses them to determine whether to show a prospective meal-orderer one establishment over another.

The Uber Trial

Nowhere is this mutated, empowered role of the star rating clearer than in the gig economy. “Star ratings are an enforcement tool that Uber has for how users should behave on the job,” said Alex Rosenblat, a researcher at Data & Society and the author of “Uberland.” Star ratings are integral to Uber’s operation, but how they appear is subtly and significantly calculated. Riders will see their driver’s star rating before they get in the car, but only after the driver has been assigned to them; there is no actionable comparison, or reading of reviews, or customer evaluation. That’s taken care of by Uber’s own software, behind the scenes. (Drivers can see riders’ ratings before they choose to accept jobs. However, turning down too many jobs can affect their status in the app as well, although not in the form of star ratings.)

Uber drivers are contractors, not full-time employees. This is both integral to Uber’s business model and, in different markets and courtrooms around the world, currently in dispute. In the meantime, star ratings provide a conduit through which Uber can assertively command and micromanage its “partners.”

Drivers receive communications from Uber not about what Uber wants them to do, exactly, but about what five-star drivers do. Rather than telling its drivers to open doors, or not to solicit cash tips, or to stock water bottles in their cars, or not to ask for a five-star review, Uber can tell its drivers that users have shared that they prefer some behaviors, and dislike others, and that acting on these observations is a way to optimize their own ratings. The possible upside is limited to remaining on the app, and perhaps being privy to certain driver promotions.

In addition to reflecting the quality of the ride and, say, the rider’s mood or feelings about Uber in general, a crude star rating system might serve as a “facially neutral route” for discrimination to manifest on the platform, and to eventually “creep in” to employment decisions, according to a paper to which Ms. Rosenblat contributed.

Contrary to the idea of the star rating as a symbol of the wisdom of the crowd, or as a way around critical gatekeepers, Uber’s system seems mostly to empower Uber, as a marketing tool and as a system of control. How else to explain a five-star scale whereby customers never see a rating lower than a 4, while many working drivers live in a state of constant anxiety about how they will be reviewed?

In a 2015 study of ratings on Airbnb, researchers at Boston University found, similarly, that 95 percent of surveyed properties had ratings of 4.5 or above. “Hosts may take great pains to avoid negative reviews,” they wrote, “ranging from rejecting guests that they deem unsuitable, to pre-empting a suspected negative review with a positive ‘pre-ciprocal’ review, to resetting a property’s reputation with a fresh property page when a property receives too many negative reviews.” Any user or host on the service can attest to the peculiar, hyper-positive tone of communication within the app, as guests and hosts test one another’s commitment to the silent Airbnb user pact: part “I won’t tell if you don’t” and part “snitches get stitches.” (Airbnb, the platform, is “Mom” and “the cops” in these scenarios.)

The Vast Google Map of the Stars

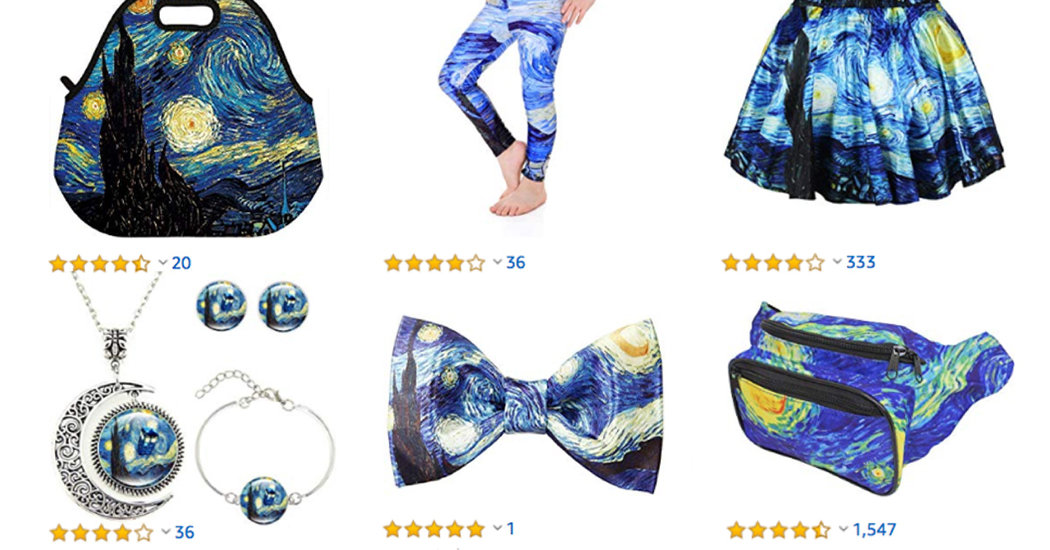

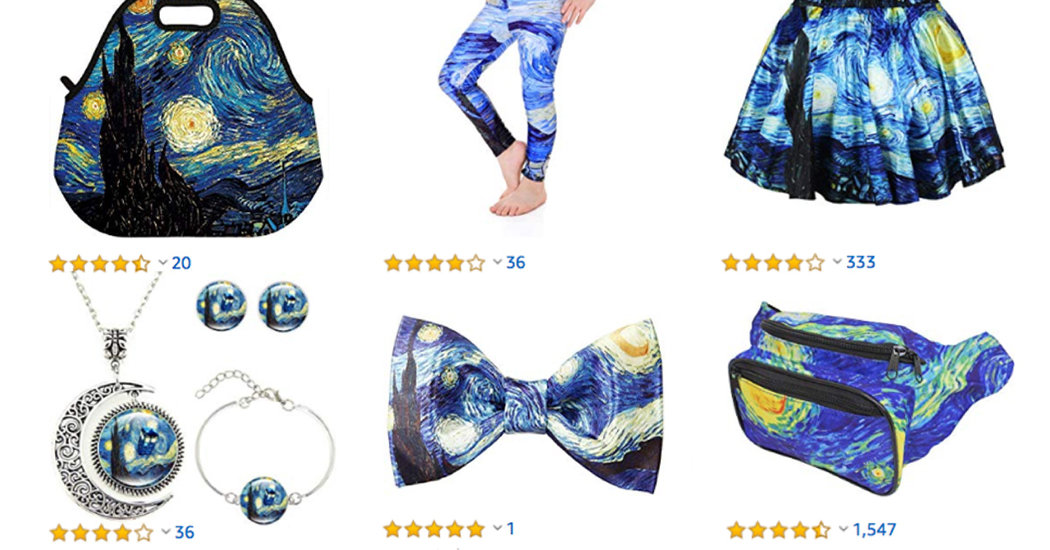

As the starred review has become a more potent tool for companies, its usefulness for users has waned. A retailer trying to catch up with Amazon might use high overall product ratings, or sheer volume of reviews, as a subtle marketing tool. Reviews on Walmart are sometimes sourced not just from its own customers, but from outside product sites — a meaningful portion of the thousands of reviews for Pampers products, for example, are copied from Pampers.com, star ratings included.

Elsewhere on screens, stars shine abundantly. Perhaps no company has embraced the power of the star as thoroughly as Google. The company oversees countless products and services that use star ratings of some sort: Google Maps, Google Shopping and the Chrome and Android App Stores, where other star-giving entities are themselves rated with Google stars.

The company’s greatest effect on the star rating may be the way in which it has made stars central to Google Search. Those pages of bagel shop reviews come from a variety of different sources, each with its own priorities, flaws and populations of reviewers. Each has taken steps to allow its star reviews to show up in Google Search, through what Google calls a “review snippet.”

Stars, it turns out, are a format that Google is eager to vacuum up given the slightest guidance. “When Google finds valid reviews or ratings markup, we may show a rich snippet that includes stars and other summary info from reviews or ratings,” Google tells developers. Including such snippets means that your Google Search result may show up with stars — a way to set it apart, visually, from other results, and to hopefully drive searchers to your site over others.
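The “markup” in question is structured data embedded in a web page. One common form is a JSON-LD block using schema.org’s `AggregateRating` type. The sketch below generates such a block in Python; the business name and numbers are placeholders, the helper `review_snippet_jsonld` is hypothetical, and whether Google actually shows stars for a given page remains up to Google, which says only that it “may show” them:

```python
import json


def review_snippet_jsonld(name, rating_value, review_count, best=5, worst=1):
    """Build a schema.org AggregateRating block wrapped in a JSON-LD script tag.

    Hypothetical helper for illustration; the values passed in are placeholders.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "Restaurant",
        "name": name,
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating_value,
            "bestRating": best,
            "worstRating": worst,
            "reviewCount": review_count,
        },
    }
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Placeholder business and numbers, not real data
print(review_snippet_jsonld("Example Bagel Shop", 4.7, 120))
```

Note what the markup carries: a single rating value and a count on a declared scale. Everything else about how those stars were earned stays on the source site.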

In Google Search folklore, this has translated approximately as: Stars are a way to get clicks. Naturally, there are guides. A story headlined “HOW TO GET YOUR BUSINESS STAR RATINGS IN GOOGLE SEARCH RESULTS” on the website of search engine consultancy Hit Search tells readers: “Have you ever seen one of your competitors with stars and ratings information appear in the search results and wondered how they got them? Well, these are organic star ratings and here’s a handy little guide to improve your chances of getting these stars next to your listings!”

The recommendations work, at least in this case: The link to this article shows up in Google’s search results with a 4.7-star rating, according to itself, rendered in Google orange, the same rating as my bagel place. At least, according to Facebook, according to Google.